Unlocking New Possibilities with AI-Enabled Workflows

Jan 19, 2024 · 4 mins read

In my last post I mentioned that products need to be AI-enabled workflows: they need to integrate and combine inputs and outputs from different sources and models, creating a new multi-step process that is fully autonomous. In this post we’ll explore what that means and why we believe it’s the way forward for AI products.

With tools such as Dify, OpenAI GPTs and Character.ai, anyone can now create and share custom prompts that adjust how the model responds to queries. AI-enabled workflows go a step further and define a multi-step process that leverages AI, enabling more complex use cases.

A very simple example of an AI-enabled workflow is Retrieval-Augmented Generation (RAG). At its core, this process involves reading documents and storing them in a database, then retrieving the relevant passages to supplement the user query with extra context so that the model can answer it correctly.
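To make the idea concrete, here is a minimal sketch of the retrieval half of RAG. It is only an illustration under some loud assumptions: the "database" is an in-memory list, relevance is a toy bag-of-words cosine score rather than real embeddings, and `DocumentStore` and `build_prompt` are names I made up for this example. The resulting prompt is what you would pass to whichever model you use.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items() if word in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class DocumentStore:
    """Hypothetical in-memory stand-in for the document database."""

    def __init__(self):
        self._docs = []  # list of (text, word counts)

    def add(self, text):
        self._docs.append((text, Counter(tokenize(text))))

    def search(self, query, k=2):
        """Return the k stored texts most similar to the query."""
        q = Counter(tokenize(query))
        ranked = sorted(self._docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question, context):
    """Supplement the user query with the retrieved context."""
    joined = "\n".join(f"- {passage}" for passage in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


store = DocumentStore()
store.add("Our refund policy allows returns within 30 days.")
store.add("Support is open Monday to Friday.")
store.add("Shipping takes three business days.")

question = "What is the refund policy?"
prompt = build_prompt(question, store.search(question, k=1))
```

A real setup would swap the word counts for embeddings and the list for a vector database, but the shape of the workflow stays the same: store, retrieve, then generate with the extra context.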

A couple of no-code tools already provide an abstraction over this workflow so that you can create AI Assistants with private knowledge to answer specialised questions.

AI-enabled workflows can run entirely on demand (the whole workflow runs when someone asks a question) or be split into phases (usually “preparation” beforehand and “generation” on demand). RAG workflows can work in both modes. When you upload a PDF to an assistant and ask questions about it, the assistant runs an on-demand workflow that analyses and processes the PDF, converting it into extra context for the answer. Conversely, the document processing and storing can be done in bulk when setting up the assistant, so that only the generation with extra context is done on demand for each question.
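The difference between the two modes is mostly about when the expensive step runs. Here is a rough sketch, assuming a hypothetical `process_document` step and a `generate` function that stands in for the model call (it just returns the prompt it would send); none of these names come from a real library.

```python
from dataclasses import dataclass, field


def process_document(raw):
    """Stand-in for the expensive step: split a document into sentence chunks."""
    return [part.strip() for part in raw.split(".") if part.strip()]


def generate(question, chunks):
    """Stand-in for the model call: returns the prompt that would be sent."""
    context = "\n".join(chunks)
    return f"Context:\n{context}\n\nQuestion: {question}"


def answer_on_demand(raw_document, question):
    """On-demand mode: processing and generation both run for every question."""
    return generate(question, process_document(raw_document))


@dataclass
class PreparedAssistant:
    """Phased mode: documents are processed once, questions reuse the result."""

    chunks: list = field(default_factory=list)

    def prepare(self, raw_documents):
        # Preparation phase, done in bulk when the assistant is set up.
        for raw in raw_documents:
            self.chunks.extend(process_document(raw))

    def answer(self, question):
        # Generation phase, the only part that runs per question.
        return generate(question, self.chunks)


document = "Invoices are due in 30 days. Late fees are 2 percent."

assistant = PreparedAssistant()
assistant.prepare([document])
```

Both paths produce the same prompt for the same document and question; the phased version simply pays the processing cost once instead of on every request.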

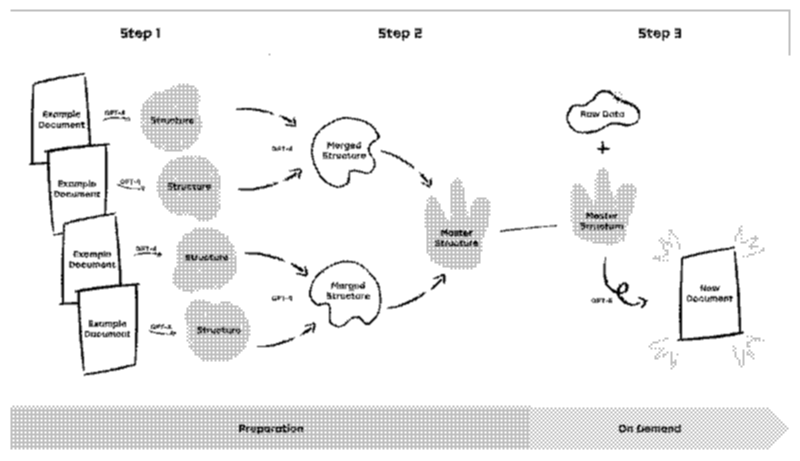

In a recent project, we leveraged AI to create a three-step workflow (see figure). In the first step, we process example documents using GPT-4 to extract their structure or skeleton. In the second step, we use GPT-4 to merge two structures into one, recursively, until we have a single master structure. Finally, on demand, we use new raw data to generate a new document using the master structure as a guide, so that GPT-4 produces content that follows an expected format and template.

The first two steps are done in the preparation phase, and can be repeated for any new source documents to further enrich the master structure. The last step is done on demand. By placing the master structure in the system prompt, we get repeatable results without a long descriptive custom instruction.
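The three steps can be sketched roughly as follows. This is not our production code: the prompts are placeholders, and the model is passed in as a plain function taking a system prompt and a user prompt, so `stub_model` below is an offline stand-in that you would replace with a real GPT-4 call. The pairwise merge order is also just one reasonable reading of "merge two structures into one recursively".

```python
from typing import Callable, List

# Assumed shape of a model call: (system_prompt, user_prompt) -> completion.
Model = Callable[[str, str], str]


def extract_structure(model: Model, document: str) -> str:
    """Step 1 (preparation): extract the skeleton of one example document."""
    return model("Extract the section outline of the document as a nested list.", document)


def merge_structures(model: Model, structures: List[str]) -> str:
    """Step 2 (preparation): merge structures pairwise until one remains."""
    if not structures:
        raise ValueError("need at least one structure to merge")
    if len(structures) == 1:
        return structures[0]
    merged = []
    for i in range(0, len(structures), 2):
        pair = structures[i:i + 2]
        if len(pair) == 2:
            merged.append(model(
                "Merge the two outlines into one outline that covers both.",
                pair[0] + "\n---\n" + pair[1],
            ))
        else:
            merged.append(pair[0])  # odd one out, carried to the next round
    return merge_structures(model, merged)


def generate_document(model: Model, master_structure: str, raw_data: str) -> str:
    """Step 3 (on demand): the master structure goes into the system prompt."""
    system_prompt = "Write a document that follows this structure:\n" + master_structure
    return model(system_prompt, raw_data)


def stub_model(system_prompt: str, user_prompt: str) -> str:
    """Offline stand-in for a real model call; it only labels its input."""
    return f"[{system_prompt.split()[0]}: {user_prompt}]"


examples = ["first example", "second example", "third example"]
structures = [extract_structure(stub_model, doc) for doc in examples]
master = merge_structures(stub_model, structures)
new_document = generate_document(stub_model, master, "new raw data")
```

Because the master structure is just a string, it can be stored after the preparation phase and reused for every on-demand request, and re-merged whenever new source documents are added.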

I believe we’ll see a rise in no-code AI workflow builders, where people can create and control the flow of data between steps. Products like LastMileAI already offer something similar. So, when building custom AI-enabled products, we need to focus on complex AI-enabled workflows that won’t be easily reproducible using these no-code builders. The barrier to entry will keep getting lower, both through new builder products and through AI assistants and agents that can help anyone create new products. On the other hand, the intellectual property of a lot of new AI products will be the prompt engineering (the model priming) that influences the way the model answers, whether that be through written instructions, extra context or response examples.

The potential of AI-enabled workflows is just starting to be tapped. As more modular and interoperable AI tools and platforms emerge, connecting multiple AI models together into multi-step workflows will unlock new possibilities. The complexity and customisation possible with this approach mean that AI products of the future will be defined less by any single underlying model, and more by the unique combinations of AI steps woven together. Companies that leverage this expertise to build customised AI product workflows stand to gain a competitive advantage, one that will only grow as modular, interoperable AI platforms become more prevalent.